Agentic AI is in desperate need of some Orchestration

Also, it turns out that people are the world’s first Intelligent Agents

Author’s Note: I broke this into two posts for length. A more general overview of CamundaCon will come in a separate post over the next week or two.

I had a great conference experience out at CamundaCon 2025 in Amsterdam. Once again they picked a historic venue, the Beurs van Berlage. Built between 1896 and 1903 to house commodity trading, it has soaring ceilings and balconies, which made it a fitting place to talk about the soaring ambitions of a certain process orchestration software company.

Agentic Orchestration

I walked away from the conference with one new idea that is much more powerful than it first appears. What if you could have a conversation with your process? What if I could keep the guardrails of a good business process around my LLM-based AI agent to keep it from doing something crazy? What if I had all the power of process orchestration, and all the power of LLMs, and none of the security risks?

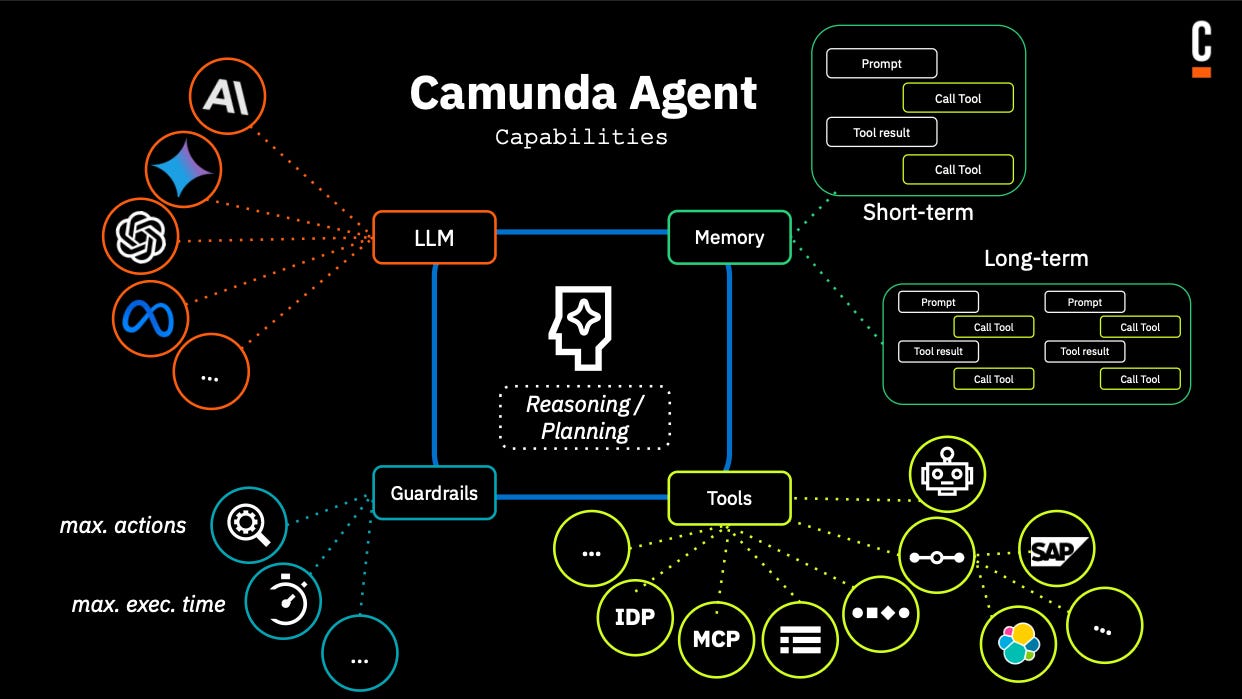

That’s the present-future that Camunda is promising in the next release, and Camunda spells it out conceptually in the chart above. First, leveraging LLMs, whichever ones you like. Second, short-term and long-term memory stores (where short-term memory lives within a specific process instance, and long-term memory spans all of your interactions). Third, tools the agent can use to take action based on the LLM’s output. Finally, guardrails: in addition to the natural guardrails of a process definition, you also have additional guardrails to protect your resources.
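To make the short-term versus long-term memory distinction concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not Camunda’s implementation: `AgentMemory` and its methods are hypothetical names, short-term memory is assumed to be keyed by process instance, and long-term memory by customer.

```python
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    # Hypothetical illustration, not Camunda's data model.
    # long_term: customer_id -> notes that survive across process instances
    long_term: dict[str, list[str]] = field(default_factory=dict)
    # short_term: instance_id -> notes scoped to one running process instance
    short_term: dict[str, list[str]] = field(default_factory=dict)

    def note(self, instance_id: str, text: str) -> None:
        """Record an observation in the current instance's short-term memory."""
        self.short_term.setdefault(instance_id, []).append(text)

    def recall(self, customer_id: str, instance_id: str) -> list[str]:
        """Context handed to the LLM: prior interactions plus this instance so far."""
        return self.long_term.get(customer_id, []) + self.short_term.get(instance_id, [])

    def complete(self, customer_id: str, instance_id: str) -> None:
        """When the instance ends, promote its notes to long-term memory."""
        notes = self.short_term.pop(instance_id, [])
        self.long_term.setdefault(customer_id, []).extend(notes)
```

For example, notes taken during one loan-workout instance become visible to the same customer’s next instance only after `complete` promotes them; until then they stay scoped to the instance that produced them.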

But what about Agentic AI and Agentic Automation? Those are getting a lot of buzz right now.

Agentic AI

A lot of noise in this market has been about AI Agents: the promise is that you don’t need to understand your processes, or how things work; you just give your AI agents all the context they need and they will act on your behalf. The problem is that there are no guardrails. What actions are the agents allowed to take, or not allowed to take? What context should these agents have or need? Which context is relevant?

No one knows what these AI agents will do when set loose. From an enterprise perspective it feels like a disaster in terms of auditability, security, data security, and other risks. Will they persist over time to do your bidding? Will their behavior veer from your intended results?

Lots of unknowns, and unknowables. But it doesn’t have to be this way.

Agentic Automation

Another source of noise in the market comes from the “formerly known as RPA” vendors, who are looking at AI Agents as a way to get more mileage out of their task automation technology. RPA provides automation, but it lacks business process context, and it is deterministic and task-oriented. It doesn’t deal well with probabilistic actions.

The notion of Agentic Automation is to give this task automation some intelligence via LLMs, and to address business process needs through an “Agent” as a shortcut, rather than through a process. The idea is that the AI Agent will “know what to do” and the task automation will then do it; the combination is Agentic Automation.


There’s Something Missing… the Process

The challenge is that they’re missing the key ingredient: a process. What does a process bring to the party?

Context - knowing what the goal of the process is, what has come before, and what information is relevant to your AI Agents.

Scaffolding - when should an Agent be activated? When is it done? When should a customer be consulted? When should a team member be consulted? What actions are available? What actions are not available? What impact do those actions have on the process? How do I connect the idea with an action? The process answers all of these questions because it is your scaffolding.

Guard Rails - we don’t share more with the agent than we have to, so I’m no longer worried about proprietary or client information leaking out. We don’t allow the Agent to pick any action that is possible in the world; it is only allowed to pick from a prescribed list of well-defined actions. This greatly reduces the need for complex negative prompting (telling the model what it must not do).
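The guard rails idea can be sketched in a few lines of Python, assuming the agent’s choice arrives as a plain action name. The action names and both functions are hypothetical; the point is only that the model is shown the prescribed list, and any reply outside that list is rejected before anything executes.

```python
# Hypothetical action names; in a real model these come from the process definition.
ALLOWED_ACTIONS: frozenset[str] = frozenset(
    {"ask_customer_question", "ask_loan_specialist", "query_knowledge_base"}
)


def build_prompt(request: str, allowed: frozenset[str] = ALLOWED_ACTIONS) -> str:
    """The model is only ever shown the actions this process step offers."""
    options = "\n".join(f"- {name}" for name in sorted(allowed))
    return f"Customer request: {request}\nReply with exactly one action:\n{options}"


def accept_action(llm_reply: str, allowed: frozenset[str] = ALLOWED_ACTIONS) -> str:
    """Validate the model's choice against the prescribed list before acting."""
    action = llm_reply.strip()
    if action not in allowed:
        # Anything outside the list is rejected, however plausible it sounds.
        raise ValueError(f"{action!r} is not an action this process offers")
    return action
```

Checking the reply after the fact, rather than relying only on the prompt, is the design choice that matters here: a model can ignore a prompt, but it cannot get past the check.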

Without the business process, implementing the right context, the right scaffolding, and the right guardrails is really difficult. Solving for that with prompt engineering, or by building bespoke software around your LLMs, is daunting.

There’s no reason to reinvent the wheel. Ad-hoc subprocesses were designed into BPMN to support humans coordinating work that doesn’t happen in a precise or predictable order.

It turns out that people are the world’s first Intelligent Agents, and we’ve been orchestrating their activities with processes for decades. Is it any surprise that processes are a fantastic way to coordinate AI agents that are acting where people would have before?

With Agentic Orchestration, to spell it out: we’re orchestrating AI Agents within the business process. Those agents are connected to actionable activities via BPMN. Those agents are connected to the right context of your business process, also via BPMN.

New Use Cases with Agentic Orchestration

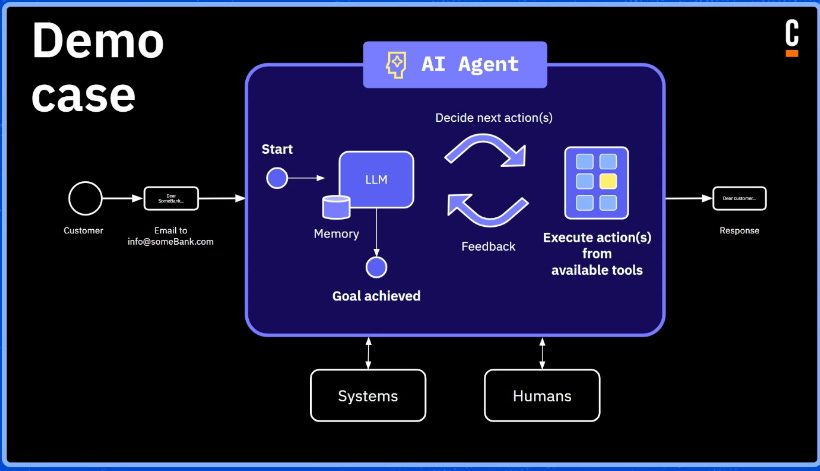

We are now unlocking a set of case management solutions that used to require humans to orchestrate them, plus human-oriented applications and bespoke software. Agentic orchestration makes it reasonable for the user interface to be email, a messaging app, or actual forms (screens). With email or chat, you’re effectively having a conversation with your process (technically, with the AI Agent within that process) while it tries to get the job done for you.

The implications for self-service customer service scenarios are immense. Applications like compliance monitoring become good fits as well.

In a session with several Camunda partners, David Brakoniecki, BP3’s Chief Delivery Officer, presented a regulatory compliance use case. His talk begins at the 13-minute mark. And you don’t just have to imagine this solution: you can engage BP3 in deploying compliance solutions in your enterprise.

Agentic Orchestration allows us to do compliance proactively and more completely, rather than after the fact and via sampling. It completely changes the way you think about compliance in Pharma and Finance.

And that is just one of many new use cases that Agentic Orchestration will handle with style.

How it’s done

So how does Camunda choose to implement this capability? Not by writing a new engine, or by dramatically changing how the product works. It turns out BPMN engines are already very powerful tools for orchestration. What is needed are the right conventions for AI Agents to take the chair where a human agent previously would have sat. I think this speaks really well to the fact that Camunda’s process orchestration engine is good, foundational software; as a result, they’re able to build on that foundation to add really interesting capabilities without breaking existing behavior.

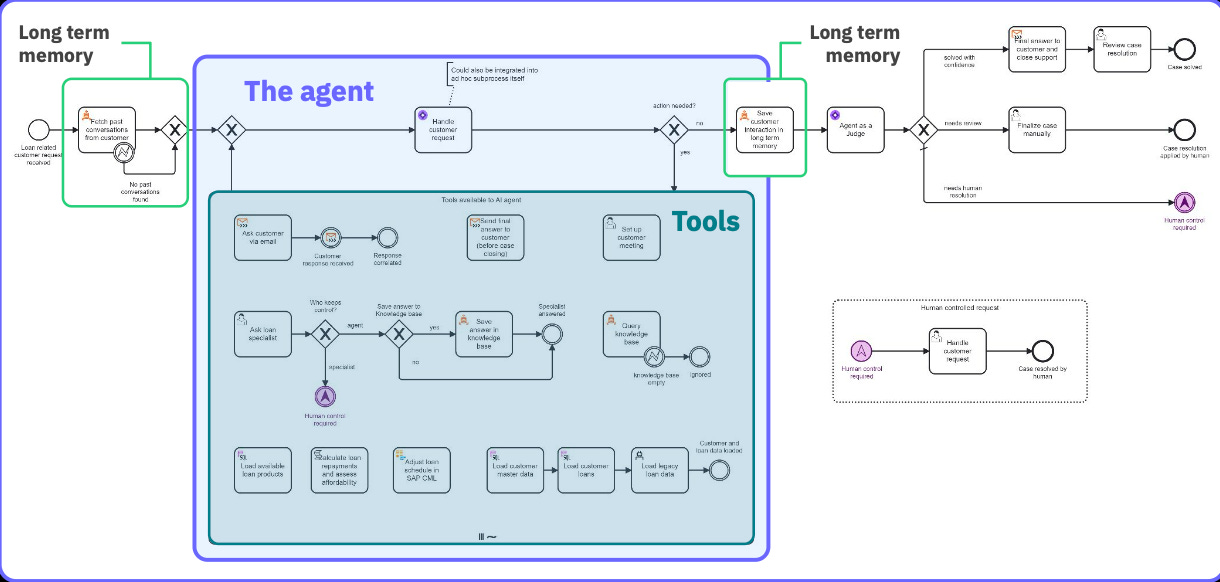

What you see pictured above is an AI Agent represented by an ad-hoc subprocess (a term that I assure you BPMN experts are quite familiar with, and one common to case management scenarios). The diagram above is more of a marketing rendering of the technical architecture that captures the key elements; an implementation-accurate BPMN view is pictured below, using the example of a client asking for a home loan workout.

In the first activity, we fetch past conversations with this customer (long-term memory); this is mirrored by storing to long-term memory when the AI Agent completes its work. The loop between the two decision gateways is the interactive loop with our customer, where the Agent decides (with an LLM) which tools to use to handle each customer request. When we’ve met our exit conditions, the last decision gateway exits the loop. At this point we can write information to long-term memory as described above, and take any final actions or reviews as the process moves on.

Each of the items in the ad-hoc subprocess is a tool that the AI Agent can use to work out the solution with our customer, as well as with internal staff. So there are activities for setting up a meeting with the client (via an email exchange), asking the customer a question via email, asking a loan specialist for help, querying the available loan products (from another system), querying the knowledge base, and loading customer-specific data.
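Here is a rough Python sketch of the loop described above, under heavy assumptions. The tool names mirror the home-loan example but are hypothetical stand-ins, the model is abstracted as a function returning a `(tool, argument)` pair, and `max_steps` is an assumed safety bound. In the real product this structure is expressed in BPMN, not in code like this.

```python
from typing import Callable

# Hypothetical stand-ins for the tools in the home-loan ad-hoc subprocess.
# Each takes an argument chosen by the model and returns an observation.
TOOLS: dict[str, Callable[[str], str]] = {
    "schedule_meeting": lambda arg: f"meeting times emailed: {arg}",
    "ask_customer": lambda arg: f"question emailed to customer: {arg}",
    "ask_loan_specialist": lambda arg: f"loan specialist asked: {arg}",
    "query_loan_products": lambda arg: "loan products: fixed-30, adjustable-5/1",
    "query_knowledge_base": lambda arg: f"knowledge base result for: {arg}",
    "load_customer_data": lambda arg: "customer data loaded",
}

# The model sees the conversation so far and returns (tool_name, argument).
Llm = Callable[[list[str]], tuple[str, str]]


def run_agent(request: str, llm: Llm, long_term: dict[str, list[str]],
              customer_id: str, max_steps: int = 10) -> list[str]:
    prior = list(long_term.get(customer_id, []))  # first activity: fetch long-term memory
    session = [f"request: {request}"]             # short-term memory for this instance
    for _ in range(max_steps):                    # hard bound set by the process, not the model
        tool, arg = llm(prior + session)          # the only non-deterministic step
        if tool == "done":                        # exit condition: leave the loop
            break
        if tool not in TOOLS:                     # only tools inside the ad-hoc subprocess exist
            session.append(f"rejected tool: {tool}")
            continue
        session.append(TOOLS[tool](arg))
    long_term.setdefault(customer_id, []).extend(session)  # last activity: store to long-term memory
    return session


def scripted_llm(steps: list[tuple[str, str]]) -> Llm:
    """Deterministic fake model for testing: replays steps, then says 'done'."""
    remaining = iter(steps)
    return lambda history: next(remaining, ("done", ""))
```

With `scripted_llm`, the model call can be swapped for a fixed script, which makes the deterministic parts (the tool list, the exit condition, the step bound, the memory writes) easy to test in isolation.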

From the customer’s point of view, this feels like an email exchange with the process. They simply write back to the request for meeting times, or for more details on their request. They use plain language in those emails, with no need to navigate a specific user interface, nor to install any applications.

I can already picture the benefit of having an agent like this tied to my processes when I’m the customer and I just want to find out the current status of my request. Imagine if I could simply ask the process, and the AI Agent orchestrated within that process could answer with what is currently happening. It could be a customer service game changer.

Additionally, you have access to all the process features a BPMN engine provides - the combination of directive, deterministic process flow with nondeterministic LLM interaction is really powerful.

Let’s focus for a moment on the tools available in the ad-hoc subprocess. The AI Agent will only act with the tools provided in that context, and only sees the data that the process definition makes available to it. In this example, that means the knowledge base, loan details, loan products, and the customer’s own data.

This immediately relieves much of the angst that enterprises feel about rolling out AI tools to all of their people. If you roll out a co-pilot that reads every document your people open and every email they write, it is *very* hard to implement useful guardrails. You don’t know what that LLM or AI agent will see on your employees’ computers, so it is hard to protect your data and your clients’ data correctly.

An Agentic Orchestration, however, loads just the data relevant to this particular case into the Agent that we have designed and built, and we even control what gets put into long-term memory and what does not. Now we know what data needs protecting and how to protect it. It is much easier to pass GDPR and other data privacy compliance reviews when you can explain who has access to what data.
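A minimal Python sketch of that data scoping, assuming case data arrives as a flat dictionary. The field names and functions are hypothetical; what it shows is that both what the agent sees and what long-term memory keeps can be explicit allow-lists, which is also what makes the privacy question of who has access to what easy to answer.

```python
# Hypothetical field lists; in practice they would be decided per process definition.
AGENT_VISIBLE: frozenset[str] = frozenset(
    {"loan_id", "balance", "missed_payments", "preferred_contact"}
)
LONG_TERM_ALLOWED: frozenset[str] = frozenset({"loan_id", "preferred_contact"})


def project(record: dict[str, object], allowed: frozenset[str]) -> dict[str, object]:
    """Keep only allow-listed keys; everything else never leaves the case."""
    return {key: value for key, value in record.items() if key in allowed}


def agent_context(case_record: dict[str, object]) -> dict[str, object]:
    """What the agent is allowed to see for this case."""
    return project(case_record, AGENT_VISIBLE)


def long_term_entry(agent_output: dict[str, object]) -> dict[str, object]:
    """What the process is allowed to remember after the case closes."""
    return project(agent_output, LONG_TERM_ALLOWED)


def access_report() -> dict[str, list[str]]:
    """A plain answer to 'who sees what', e.g. for a privacy review."""
    return {"agent": sorted(AGENT_VISIBLE), "long_term_memory": sorted(LONG_TERM_ALLOWED)}
```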

Agentic Orchestration flips the script because the unknowns become knowns, and we can solve for known use cases. It is very hard to solve for all theoretical use cases. Good news: your business isn’t theoretical - it runs on specific business processes which you can represent and run with process orchestration, like Camunda.

It’s a whole new world out there

In summary:

Agentic AI and Agentic Automation both fail to leverage business processes. Therefore, their behaviors in your business are a bit unpredictable and difficult to constrain (or enable) in the right way.

Co-pilot style AI is also difficult to put guardrails on, especially with regard to protecting information.

Your business isn’t theoretical - it runs on business processes. So we don't have to accept abstract notions of what some AI agent should be allowed to do. We can look at our specific processes and design what AI Agents are expected to do.

People were the first intelligent agents, and our businesses greatly benefited from orchestrating their actions and decisions with processes. We’ve been doing this for decades! It only makes sense that AI Agents need this process orchestration scaffolding even more than people do.

You can have a conversation with your process, and where it leads doesn’t have to be non-deterministic: we can combine the probabilistic nature of LLMs with the deterministic nature of processes to create new ways of executing processes.

Camunda has really opened up a world of new use cases that used to be an awkward fit for process alone, and were not a fit at all for AI alone or automation alone. The choreography of AI Agent technology (LLMs, etc.) and process orchestration technology (ad-hoc subprocesses, activities, BPMN primitives) leads to a great solution.

Exciting times ahead.

You can find the whole Day 2 CamundaCon keynote that covers this material right here.

Such a powerful analysis of how AI agents need context. It's magical thinking that AI agents could do something useful all on their own, atomically.

This quote is great:

"People were the first intelligent agents, and our businesses greatly benefited from orchestrating their actions and decisions with processes. We’ve been doing this for decades! It only makes sense that AI Agents need this process orchestration scaffolding even more than people do."

There's a lot of work to be done, though, to realize the promise. My evidence is in part the use of the word "desperate" in your headline 😂