AI and the Great Filter

Only 5% of AI projects were reported to achieve meaningful return on investment - what does that mean if you're in the 5%?

On the heels of the last Apple research paper on why and how LLMs lack real reasoning power, MIT has set the AI world afire with its latest study on AI project failure rates.

An astonishing 95% of AI projects fail.

Fortune Magazine summarizes the findings very nicely in this article, and supplements them with an interview with the author.

80% of organizations explore AI tools

60% evaluate enterprise solutions

20% launch pilots

A mere 5% reach production with measurable impact…
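To see where the funnel narrows most, the figures above can be turned into stage-to-stage conversion rates. This is a rough sketch, not the study's own analysis: it assumes each percentage is a share of all organizations surveyed and that the stages happen in sequence. The names `funnel` and `stage_conversion` are mine, for illustration only.

```python
# Funnel figures quoted above, read as a share of all organizations (assumption).
funnel = [
    ("explore AI tools", 80),
    ("evaluate enterprise solutions", 60),
    ("launch pilots", 20),
    ("reach production with measurable impact", 5),
]


def stage_conversion(stages):
    """Return (from_stage, to_stage, percent of from_stage that reaches to_stage)."""
    rates = []
    # Pair each stage with the one that follows it.
    for (prev_name, prev_pct), (name, pct) in zip(stages, stages[1:]):
        rates.append((prev_name, name, round(100 * pct / prev_pct, 1)))
    return rates


conversions = stage_conversion(funnel)
for prev_name, name, rate in conversions:
    print(f"{prev_name} -> {name}: {rate}% continue")
```

Under that reading, three quarters of explorers go on to evaluate, about a third of evaluators launch a pilot, and only a quarter of pilots reach production. The biggest losses come late, after real money has been spent.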

This makes the failure rates of IT projects generally, and of offshore projects, pale by comparison. A 95% failure rate is astonishing.

This sets up the premise of the title of this post: the concept of the Great AI Filter.

Understanding Failure

But first, let’s understand these failures and why they occur. The paper itself - and the *many* articles and blog posts summarizing and re-summarizing it - does a good job of this. Here are the reasons that resonated with me:

Low ROI is responsible for some of these failures. In my long experience of working with clients to adopt new technology, the most common reason for this is not the technology, but the use case. Often, to avoid risk, management teams will choose a use case that simply has no value, except as a proof of concept. The problem is that the lack of value itself can kill off the technology adoption. Your organization has a finite amount of focus - don’t squander it on projects that don’t matter!

Despite widespread investment, there are minimal outcomes to speak of. The reasons appear to be related to the gap between reality and hype, but also to maturity issues like integration and data security.

The “learning gap” is too large - in other words, the organization simply lacks the capability to foster and grow its use of AI.

Seth Levine’s article on this subject explains one type of failure exceptionally well, and it is one I have seen in the field. Seth calls it the “Rollout Trap”.

In his words, the vendor hype cycle leads to inflated expectations, and prototypes are quick and shiny with the help of generative AI coding tools. Leaders then focus on quick wins for ROI, chasing headcount reductions rather than building capability.

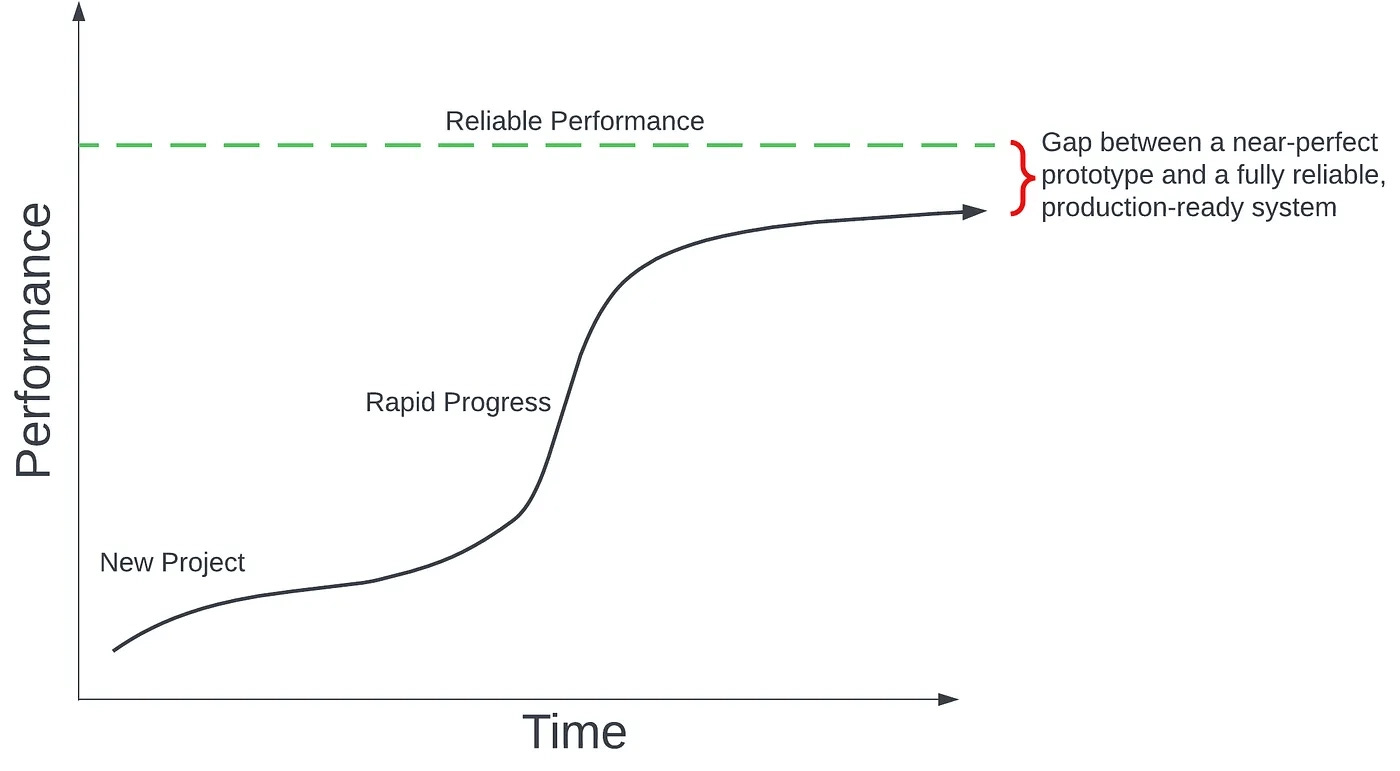

And the challenge comes when you have a solution that is “pretty close” - but does not meet your operating goals. You can’t use it until it meets or exceeds your operating threshold. Seth labels that “reliable performance”, but it could be labeled as “precision” or “accuracy”. An LLM gets you to 80% quickly, but you need 99% accuracy - and you just can’t get there.

Think of AI as automation, rather than intelligence

In a normal software project, if you’re 80% there, or 85% there, then the rest is just the slog to production. You can definitely just put the resources into deployment and scaling if you truly are that close. But with a generative AI approach, that’s not the case - you are likely approaching an asymptotic limit…

You can keep shoving investment into an LLM and it won’t quite get you there if it is inherently an incorrect or incomplete approach to the problem you’re solving. In the same way, if you try to solve a problem with first-order predicate logic rules, you will run across some problems that are intractable to represent this way.

In a conversation with former colleagues, we were discussing these failures and why they happen, and I jotted some thoughts down to share here.

Magical Thinking

I think a lot of these failures are a result of magical thinking, in a perfect storm of hype and pressure to keep up.

Hype Hype Hype. The press and many tech execs have been relentlessly pumping their AI tools and how transformative they’ll be - even bragging about how much of their code is written by AI (link to Exponential Growth article for more, below), or how many people they can now lay off. Also: the demos and prototypes are slick - and even useful for normal consumer behavior.

Anthropomorphizing. Tech execs have also been relentlessly anthropomorphizing AI tools and techniques. This inevitably leads companies (and people) to make mistakes in terms of how they use them, aiming for too wide an aperture instead of focusing in.

Pressure to keep up. Typical non-tech companies are feeling the pressure to keep up with not only their competitors but also with the expectations their own teams and executives have for what consumer AI tech is doing, which again, feels magical - but from an enterprise perspective is like a good demo.

Maslow’s hammer. The error rate for generative AI is real, and people keep trying to use these tools for applications that don’t tolerate a high error rate. Or they fail to use a more appropriate technique for the parts that need to be accurate, and a generative technique for the parts that are more forgiving. A lot of this is “golden hammer” stuff (everything is a nail! Also, I like the name Maslow’s Hammer even better). When I was running BP3, I saw a lot of companies trying to use LLMs to do what decision automation software is really good at - and then utterly failing when error rates went from 1% to 20%, and the goal was 0.001%. (This relates precisely to Seth Levine’s point on the “Rollout Trap”.)

Humans are allowed to err, automation software is not. Leaders need to understand that people are allowed to make mistakes, but automation is not. We don’t accept mistakes from calculators or Excel spreadsheets or nearly any other kind of software. On the other hand, the highest customer service rankings, reputationally speaking, go to organizations that make mistakes, but then do a GREAT job of addressing them. Hotels and restaurants that never screw up never get a chance to demonstrate that “next level” of customer service: making it right for a customer. So they score well, but not at the top.

LLMs are not people. LLMs are not junior people, either. Repeat after me. It is tempting to think in advance about how an LLM can do “what a junior person could do” (insert your own flavor of this - junior developer, etc.), but an LLM isn’t a person. It does specific things, and it doesn’t learn from your preferences very well (there’s only so much context). A person will learn how to work with you effectively. Basically, you have a nail gun. It can help you do certain things a lot faster, and other things, not at all (or badly!). If you use a nail gun to hang your windows... you might break some glass.

LLMs won’t really learn on the job. They won’t be better at the job you instruct them to do on day 10, or day 100. Nor will they do it without you explicitly telling them to, the way an employee would. Maybe you will get better at micromanaging them with ever more specific instructions. Maybe not.

Learn locally, together. Offshoring stuff that should be done locally where everyone can meet/talk/interact a lot is a mistake. You’re learning something new as an organization and you either need to be very skilled at doing so remotely, or do it locally, with the help of local expertise!

Undervaluing the quality of the humans whom you need to run these programs successfully. All too often, I have seen companies get “cheap” with the consultants they hire, and the people they employ, when rolling out transformative technologies. It never ends well.

Not spending time on designing what the desired outcomes are - with some tech-informed UI/UX design types involved. Do the user research (thank you John Morkes for educating me on that concept and the value it has offered). Without this, the work is often a bit rudderless: undirected experimenting rather than a focused effort toward an outcome. A shameless plug here: BP3 is an excellent partner for helping you work through this.

Back to Maslow’s Hammer. Use the right tools for the job, and a lot of times the right tools aren’t the ones we came to the party with. Use the right ones. Use them together and in combination. Especially in combination. You’ll be amazed what you can build.
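One way to picture “in combination” is a pipeline that sends the accuracy-critical step to deterministic rules and only the forgiving step to a generative model. The sketch below is hypothetical throughout: `approve_refund`, `draft_reply`, the refund policy values, and the stubbed `fake_generate` function are illustrative names and numbers of mine, not anything from a real client or library.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RefundRequest:
    amount: float
    days_since_purchase: int
    customer_note: str


def approve_refund(req: RefundRequest) -> bool:
    """Deterministic decision rule: same input, same answer, every time."""
    # Hypothetical policy: refunds up to 500 within 30 days of purchase.
    return req.days_since_purchase <= 30 and req.amount <= 500


def draft_reply(req: RefundRequest, approved: bool,
                generate: Callable[[str], str]) -> str:
    """Forgiving step: wording can vary, so a generative model may draft it."""
    outcome = "approved" if approved else "declined"
    prompt = f"Write a short, polite reply. Refund {outcome}. Note: {req.customer_note}"
    return generate(prompt)


def handle(req: RefundRequest, generate: Callable[[str], str]) -> tuple[bool, str]:
    approved = approve_refund(req)  # the decision never touches the model
    return approved, draft_reply(req, approved, generate)


# Stand-in for a real model call, so the sketch runs without any service.
def fake_generate(prompt: str) -> str:
    return f"[draft based on: {prompt}]"


decision, reply = handle(RefundRequest(120.0, 12, "Item arrived damaged"), fake_generate)
print(decision, reply)
```

The point of the split is that the model’s error rate can only affect the wording of the reply, never the decision itself.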

Those are the mistakes (and some advice mixed in), from my experience. More examples? Comment below!

My Advice

I’ll offer two more nuggets of advice from my perspective:

First, think of AI not as artificial intelligence - you’ll easily walk into the anthropomorphism trap - think of it as automation. And for each AI tool, ask: what does it automate for you? Write it down in a sentence. Now you’re demystifying it. LLMs and generative AI open the doors to many more things being automated.

Second, don’t start by designing how to remove people from your team, program, company, process, or whatever… start by designing for people. Ask and answer these questions for yourself:

How do we make that person the best that they could be with AI?

How do we make the average person in that role more like a star with AI?

How do we elevate everyone’s capability with AI?

How do we make this software a great experience for that person with AI?

How do we make the team better with AI?

How can we empower them with new capabilities and responsibilities with AI?

You should ask these questions with any software program or process improvement program - and drop the “with AI” ending to get the same mindset. Try it!

If you start with job/person elimination, you tend to design things that the people you retain will hate using. Your lack of care for the humans in your organization will show through, as corporate intent always does… and your lack of care will be implicitly and explicitly communicated through the ranks of executives, IT, and business. And that is a path to join the 95% of AI project failures…

Focusing on your humans is the recipe for success…

Back to the Great Filter

The Great Filter is an idea that, in the development of life from the earliest stages of abiogenesis to reaching the highest levels of development on the Kardashev scale, there is a barrier to development that makes detectable extraterrestrial life exceedingly rare.[1][2] The Great Filter is one possible resolution of the Fermi paradox. The main conclusion of the Great Filter is that there is an inverse correlation between the probability that other life could evolve to the present stage in which humanity is, and the chances of humanity to survive in the future.

What does this have to do with AI and your company, you might ask?

Well, if you can break through the 5% filter - and build a team with the capability to deploy AI successfully and generate value for your company with AI - then you are going to be better than 95% of the companies out there that you might compete with. The winners have a chance to capture outsized gains in this environment, because one thing the hype is not wrong about: AI is transformative technology with immense potential for all of us.

I suspect, if these percentages do not improve, we will see a real separation of Haves and Have-nots in the corporate world - those who successfully leverage AI to improve their business dramatically, and those who don’t.

The first step is to define what “improve your business dramatically” means for you. If your motivation starts with layoffs, my guess is that you’ll have a hard time finding the results you’re looking for, because your design point is wrong. It is *hard* to replace what humans do, but it is *easier* to help humans be better at what they do.

Great post! Two additional thoughts...

1. "80% of organizations explore AI tools". As has always been the case, tools should be step 4 or 5 in your AI plan and not step 1. Starting with tools is inherently limiting in defining business cases, and can lead to confusion among the folks outside IT.

2. Anthropomorphizing is a REAL problem. The opportunity isn't to optimize an existing process, and doing so will lead to nominal ROI. The opportunity is to reimagine the process and focus on better ways to achieve outcomes. Recall our shared client that wanted to automate gas forecasting. The first business case was automating human tasks, resulting in savings of a few hundred thousand dollars. The real business case was the application of AI to weather forecasting and forward contracts, where the ROI was in the many millions.